Overview

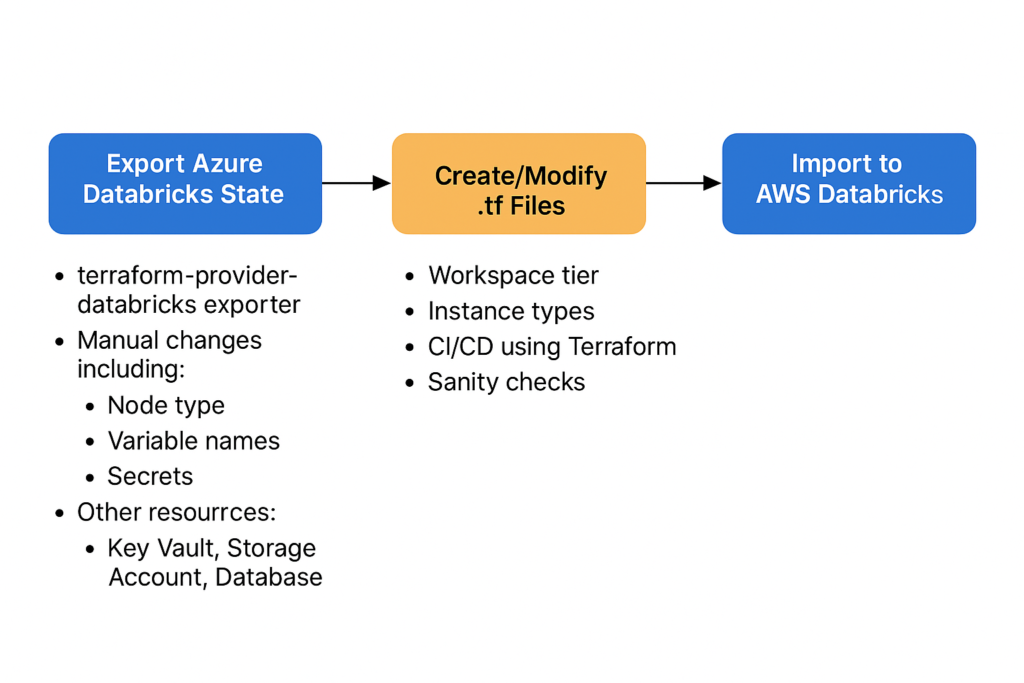

This blog post outlines a Proof of Concept (PoC) for migrating Databricks resources from Azure to AWS using Terraform. While still requiring some manual adjustments, this approach simplifies much of the resource migration using the experimental exporter from the Terraform Databricks Provider.

We also touch upon best practices, additional resource migrations (like Key Vault and Storage), and CI/CD integration using Terraform.

Why Migrate Databricks Across Clouds?

Organizations often migrate between cloud providers due to cost optimization, data residency, scalability, or enterprise strategy. Recreating complex Databricks workspaces manually is not efficient. That’s where infrastructure as code (IaC) and tools like Terraform come in.

Challenges in Migration

Resource Compatibility & Configuration Drift

Not all Databricks resource definitions are 1:1 compatible across Azure and AWS.

Fields like node_type_id, instance_pool_id, and the runtime version (spark_version) must be manually mapped or updated.

🔐 Secrets Management Transition

Azure Key Vault and AWS Secrets Manager/KMS have different APIs, configurations, and access policies.

You must refactor secrets references in jobs, notebooks, and pipelines carefully to avoid runtime failures.

📂 Storage & Mount Point Migration

Migrating from Azure Data Lake Storage (ADLS) to Amazon S3 requires changes in:

- Mount scripts (wasbs:// or abfss:// → s3a://)

- IAM roles, trust policies, and credentials

Data migration tools (like AzCopy, AWS CLI, or DataSync) need validation for data completeness and integrity.

📘 CI/CD Pipeline Disruption

Existing DevOps pipelines tied to Azure-specific APIs or services may need to be restructured.

Secrets, Terraform backends, and workflows must be refactored and tested in the new environment.

🛠️ Tooling Limitations

The Databricks resource exporter is still experimental and doesn’t support 100% of resources.

💰 Cost Modeling & Forecasting

AWS and Azure pricing structures differ significantly (compute, storage, egress).

Without a proper forecast model, businesses may experience unexpected cost surges.

🔄 Change Management

Shifting to a new cloud means retraining teams, updating SOPs, and preparing users for changes in UI/UX and access control mechanisms.

📉 Downtime & Migration Risk

Any errors in resource replication or configuration can lead to job failures or data unavailability.

Mitigating this requires a well-planned parallel run or staged cutover.

Some components (like MLflow artifacts, workspace settings, dashboards) might need manual recreation or scripting via REST APIs.

Migration Process

Step 1: Set Up Terraform for Azure Databricks

Create a Terraform configuration folder:

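versions.tf

    terraform {
      required_providers {
        databricks = {
          source  = "databricks/databricks"
          version = "1.5.0"
        }
      }
    }

azure_databricks.tf

    # Declare DATABRICKS_HOST and DATABRICKS_TOKEN as input variables, or omit both
    # arguments so the provider reads the environment variables of the same names.
    provider "databricks" {
      host  = var.DATABRICKS_HOST
      token = var.DATABRICKS_TOKEN
    }

Initialize Terraform:

    terraform init

Ensure the terraform-provider-databricks executable is in the same directory (terraform init downloads it under the .terraform folder).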

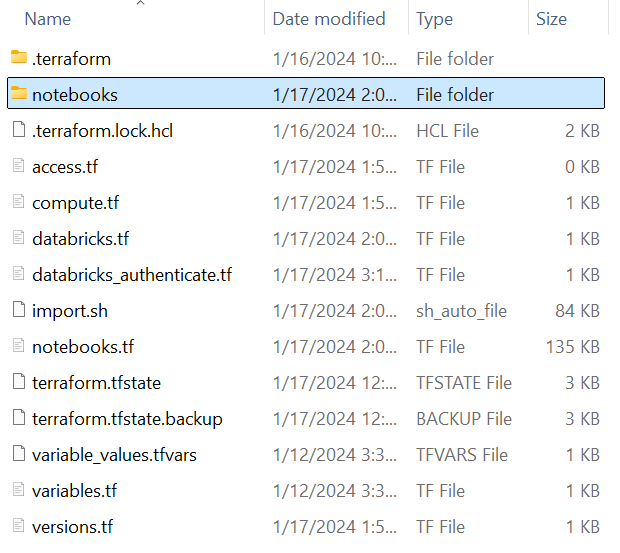

Step 2: Export Azure Resources

Run the exporter tool:

    terraform-provider-databricks exporter \
      -skip-interactive \
      -services=groups,secrets,access,compute,users,jobs,storage,notebooks \
      -listing=jobs,compute,notebooks \
      -last-active-days=90 \
      -debug

This generates Terraform HCL files for:

- Notebooks

- Clusters

- Jobs

- Secret scopes

- IP access lists

- Groups, Repos, Mounts, etc.

Manual Adjustments

After export, the following manual edits are typically required:

1. Cluster Node Type Adjustments

- Azure: Standard_DS3_v2

- AWS: use r5d.large, i3.xlarge, etc.

Check Databricks AWS supported instance types to choose appropriate types.
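One way to keep this mapping explicit is to hold it in a Terraform local and look node types up from there. The sketch below is only illustrative: the map name `azure_to_aws_node_type`, the cluster name, the worker counts, and the single Azure-to-AWS pair are assumptions, not sizing advice. It also uses the provider's `databricks_node_type` and `databricks_spark_version` data sources so that the fallback node type and the runtime are resolved against the target workspace rather than hard-coded.

```hcl
# Illustrative Azure -> AWS node type pairs (assumption); validate sizing per workload.
locals {
  azure_to_aws_node_type = {
    "Standard_DS3_v2" = "i3.xlarge"
  }
}

# Fallback: smallest node type with local disk available in the target workspace.
data "databricks_node_type" "fallback" {
  local_disk = true
}

# Resolve a long-term-support runtime that exists in the target workspace.
data "databricks_spark_version" "lts" {
  long_term_support = true
}

resource "databricks_cluster" "migrated" {
  cluster_name            = "migrated-cluster" # hypothetical name
  spark_version           = data.databricks_spark_version.lts.id
  autotermination_minutes = 30

  # Use the mapped AWS type, or the fallback when the Azure type is not in the map.
  node_type_id = lookup(
    local.azure_to_aws_node_type,
    "Standard_DS3_v2",
    data.databricks_node_type.fallback.id
  )

  autoscale {
    min_workers = 1
    max_workers = 4
  }
}
```

In exported files, the first argument to `lookup` would be whatever Azure node type the exporter wrote for that cluster.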

2. Workspace Tier Compatibility

- Premium features (like access control or credential passthrough) require Premium tier workspaces.

- Double-check permissions, policies, and cluster features used.

3. Secrets Management

If secrets were stored in Azure Key Vault:

    resource "databricks_secret_scope" "example" {
      name                     = "example-scope"
      initial_manage_principal = "users"
      backend_type             = "AZURE_KEYVAULT"

      keyvault_metadata {
        resource_id = "..."
        dns_name    = "..."
      }
    }

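Key Vault-backed scopes are only available on Azure Databricks, so on AWS replace this with a Databricks-backed secret scope (keep the source-of-truth values in AWS Secrets Manager if needed):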

    resource "databricks_secret_scope" "example" {
      name                     = "example-scope"
      initial_manage_principal = "users"
    }

Then upload secrets using CLI or API.
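As an alternative to the CLI or API, secret values can also be managed from Terraform with the `databricks_secret` resource. This is a minimal sketch that reuses the `example` scope defined above; the variable name `db_password` and the key `db-password` are hypothetical. Note that the value ends up in the Terraform state, so the state backend needs to be protected accordingly.

```hcl
# Hypothetical input; supply it via TF_VAR_db_password or a secure CI variable.
variable "db_password" {
  type      = string
  sensitive = true
}

# Stores one key/value pair in the Databricks-backed scope defined above.
resource "databricks_secret" "db_password" {
  scope        = databricks_secret_scope.example.name
  key          = "db-password" # hypothetical key name
  string_value = var.db_password
}
```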

4. Storage Mount Points

- Change wasbs:// or abfss:// mounts to s3a://

- Update IAM roles and instance profiles accordingly.
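The S3 side of a mount could be sketched as follows, assuming an instance profile that already grants access to the bucket. The variable name, the mount name `landing`, and the bucket name are placeholders, not values from the exported configuration.

```hcl
# ARN of an existing AWS instance profile with access to the bucket (assumption).
variable "instance_profile_arn" {
  type = string
}

# Registers the instance profile with the Databricks workspace.
resource "databricks_instance_profile" "s3_access" {
  instance_profile_arn = var.instance_profile_arn
}

# Mounts the bucket under /mnt/landing using that instance profile.
resource "databricks_mount" "landing" {
  name = "landing" # hypothetical mount name

  s3 {
    instance_profile = databricks_instance_profile.s3_access.id
    bucket_name      = "example-migrated-bucket" # placeholder bucket
  }
}
```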

Migrating Non-Databricks Dependencies

1. Key Vault to AWS Secrets Manager

- Export secrets from Key Vault using Azure CLI or scripts.

- Recreate them in AWS Secrets Manager or Systems Manager Parameter Store.
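If the recreated secrets should themselves live in Terraform, a sketch with the AWS provider might look like this. It assumes the `aws` provider is already configured in the same configuration; the secret name and the variable are hypothetical, and the value exported from Key Vault is passed in rather than hard-coded.

```hcl
# Value exported from Azure Key Vault, supplied at apply time (hypothetical variable).
variable "migrated_secret_value" {
  type      = string
  sensitive = true
}

# The secret container in AWS Secrets Manager.
resource "aws_secretsmanager_secret" "migrated" {
  name = "databricks/example-secret" # placeholder name
}

# The actual secret value, stored as a new version of the secret.
resource "aws_secretsmanager_secret_version" "migrated" {
  secret_id     = aws_secretsmanager_secret.migrated.id
  secret_string = var.migrated_secret_value
}
```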

2. Storage Accounts

- Migrate data using AzCopy, AWS CLI, or tools like AWS DataSync.

- Update mount logic accordingly in Terraform and notebooks.

3. Databases (e.g., Azure SQL to Amazon RDS)

- Use AWS DMS (Database Migration Service) or schema export/import tools.

Deploying to AWS Databricks

Create a new Terraform folder for AWS:

aws_databricks.tf

    provider "databricks" {
      host  = var.DATABRICKS_HOST
      token = var.DATABRICKS_TOKEN
    }
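The provider block references var.DATABRICKS_HOST and var.DATABRICKS_TOKEN, so both need to be declared somewhere in the folder. A minimal sketch, assuming a separate file (the name variables.tf is only a suggestion):

```hcl
# URL of the target AWS Databricks workspace.
variable "DATABRICKS_HOST" {
  type        = string
  description = "Workspace URL of the AWS Databricks deployment"
}

# Personal access token; marked sensitive so Terraform redacts it in plan output.
variable "DATABRICKS_TOKEN" {
  type      = string
  sensitive = true
}
```

Terraform picks these up from environment variables named TF_VAR_DATABRICKS_HOST and TF_VAR_DATABRICKS_TOKEN, which keeps the token out of version control.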

Copy over exported .tf files (with edits) and run:

    terraform init
    terraform plan
    terraform apply

Sanity Checks Post-Migration

- Validate all jobs and clusters are running.

- Check notebook content and mounts.

- Verify secret scopes and access controls.

- Confirm integration with data sources (S3, RDS, etc.)

CI/CD for Databricks Using Terraform

Suggested Structure

- Use a Git-based workflow (e.g., GitHub, GitLab)

- Use Terraform Cloud or CI pipelines for deployment

Sample CI Pipeline Steps

- Lint and format check

- terraform init

- terraform validate

- terraform plan

- Manual approval step

- terraform apply

Use workspaces or variables to separate environments (dev, prod).
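As a rough illustration of environment separation, the sketch below pairs a remote S3 state backend with per-workspace settings keyed on `terraform.workspace`. The bucket name, state key, region, and worker counts are placeholders, and the S3 backend is just one possible choice for an AWS-hosted setup.

```hcl
# Remote state in S3; each Terraform workspace gets its own state object.
terraform {
  backend "s3" {
    bucket  = "example-terraform-state"      # placeholder bucket
    key     = "databricks/terraform.tfstate" # placeholder key
    region  = "us-east-1"                    # placeholder region
    encrypt = true
  }
}

locals {
  # Name of the currently selected workspace, e.g. "dev" or "prod".
  env = terraform.workspace

  # Hypothetical per-environment sizing.
  max_workers_per_env = {
    dev  = 1
    prod = 4
  }

  # Falls back to 1 for any workspace not listed above.
  max_workers = lookup(local.max_workers_per_env, local.env, 1)
}
```

A pipeline would then run `terraform workspace select dev` (or `prod`) before `terraform plan`, and resources can reference `local.max_workers`.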

Summary

Migrating Databricks from Azure to AWS is achievable using Terraform and the experimental resource exporter. With some manual tweaks to instance types, storage mounts, and secrets, you can replicate most of your workspace across clouds; components the exporter does not cover still need manual recreation or scripting.

Key Benefits:

- Far less manual recreation of resources

- Repeatable and version-controlled infrastructure

- CI/CD integration for environment promotion

While the exporter tool is still evolving, it significantly reduces the effort needed for cross-cloud Databricks migration.